0xDEAFBEEF

Guide for Reconstructing Media: Series 0-5

The following guide applies to Series 0-5: Synth Poems, Angular, Transmission, Entropy, Glitchbox and Advection.

- For reconstructing Noumenon, instead follow this guide.

- For reconstructing Chronophotograph, instead follow this guide.

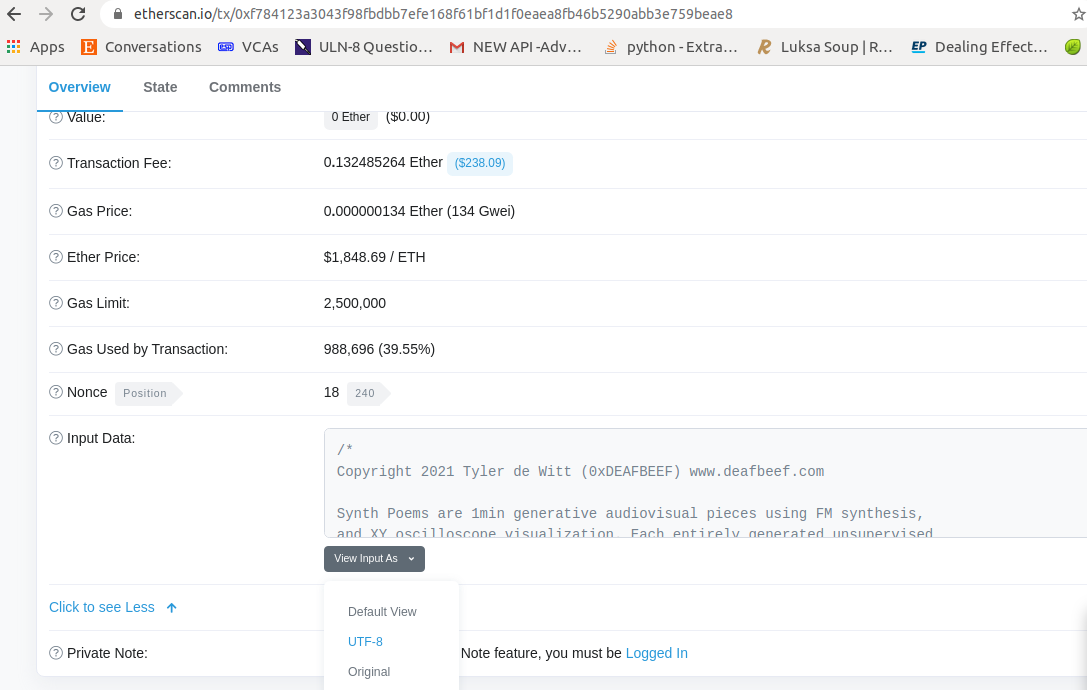

Step 1: Obtain the C source code file and your token's random hash value

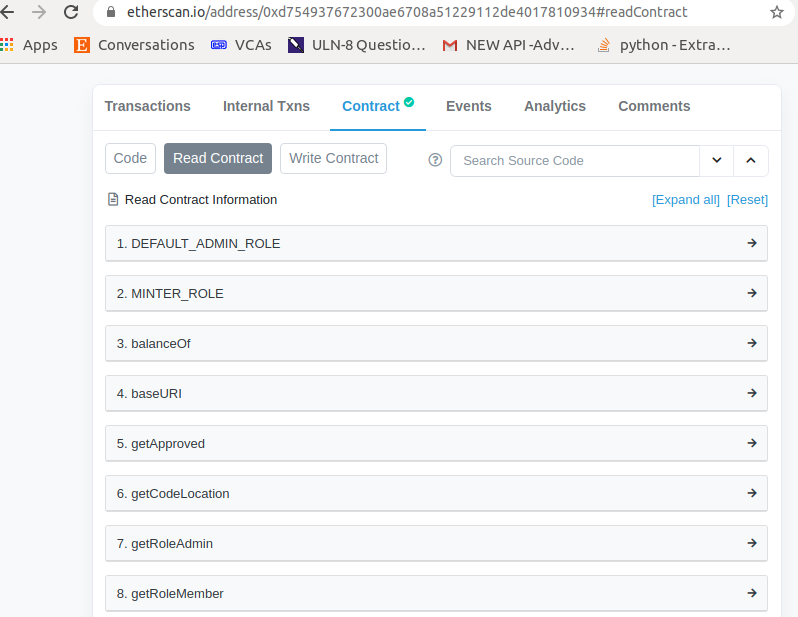

Use a web3 client or etherscan.io to interact with the contract at https://etherscan.io/address/0xd754937672300ae6708a51229112de4017810934.

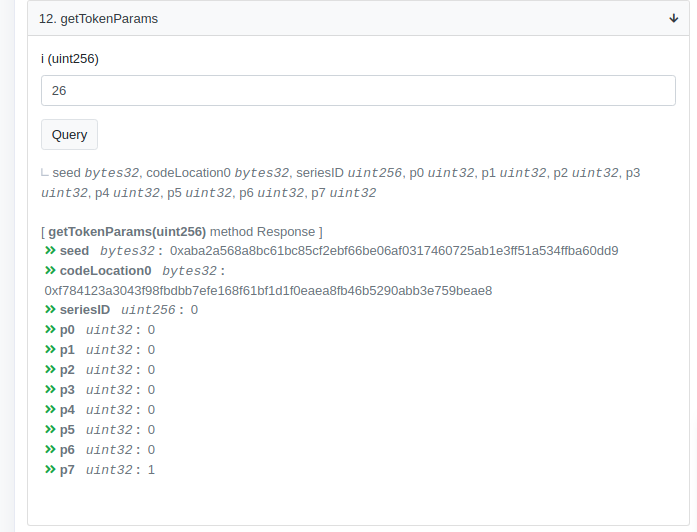

Use the getTokenParams() function, passing your token ID.

Step 2: Replace the 'seed' variable with your token's unique random hash

Near the top of the C source file, there is a line:

char *seed="0x2c68ca657f166e1e7a5db2266ee1edca9daf2da5ecc689c39c1d8cfe0b9aad2e";

Replace that value with the 'seed' value previously read through getTokenParams().

Step 3: Install a C compiler for your platform.

Linux is the recommended platform, and GNU GCC is the recommended C compiler; it is available through the default package managers. On Mac, you may be able to install it with Homebrew. On Windows, try the GNU GCC binaries.

Step 4: Compile and run the program.

The code conforms to the ISO C99 standard. Its only library dependency is the C math library, so pass '-lm' to gcc. It assumes a little-endian architecture (all Intel processors are little endian).

First, create a directory to store the output images:

$ mkdir 'frames'

Next, compile and run. Optionally pass the '-Ofast' flag to enable optimization (faster rendering).

$ gcc -Ofast main.c -lm && ./a.out

This will produce:

a. Numbered image files in BMP file format, stored in the 'frames' directory, at 24 FPS (frames per second)

b. An audio file named 'output.wav' in WAV format: stereo, 44.1 kHz, 16-bit depth

This is the 'raw' information representing digital audio signals and image pixel intensities.

Step 5: Encode raw output to chosen media format (MP3, MP4, JPG, PNG, etc.)

This raw information can be encoded perceptually using whatever tools exist at the time of reconstruction. At present, for example, open-source tools such as ImageMagick and ffmpeg can be used to encode images, video and audio into different formats for popular distribution. Linux is the recommended platform; ffmpeg is available from the default package manager. On Mac, use Homebrew to install ffmpeg.

Examples:

1. Audio can be encoded to MP3:

$ ffmpeg -i output.wav -b:a 192k output.mp3

2. Images can be assembled into an animation and encoded to MP4, for example:

$ ffmpeg -framerate 24 -i frames/frame%04d.bmp -crf 20 video.mp4

3. Audio can be combined with the video:

$ ffmpeg -i output.wav -i video.mp4 -c:v libx264 -c:a aac -b:a 192k -pix_fmt yuv420p -shortest audiovisual.mp4

4. Screenshots of particular frames (in this example, frame 60) can be made using ImageMagick:

$ convert frames/frame0060.bmp -scale 720x720 image.png